ResNet architecture. Residual blocks.

Tags: CV | feature extractor

Main idea

The main idea of ResNet is to build the network from residual blocks (its “building” blocks). Suppose there is an “ideal” output feature map out_feature_map. A plain CNN (like VGG) tries to approximate out_feature_map directly:

cnn_out_feature_map = CNN_block_first(inp_feature_map)

ResNet instead obtains out_feature_map as follows:

out_feature_map = RES_block_first(inp_feature_map) + inp_feature_map
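A minimal NumPy sketch of the formula above, assuming a toy residual branch made of two fully connected layers with a ReLU as a stand-in for the convolutions (the names `residual_branch` and `residual_block` are hypothetical). Because the block only has to learn the residual RES_block_first(x) = out_feature_map - inp_feature_map, a branch whose last layer outputs zeros leaves the input unchanged:

```python
import numpy as np

def residual_branch(x, w1, w2):
    """Toy residual branch F(x): linear -> ReLU -> linear (stand-in for CONV+BN+ReLU)."""
    return w2 @ np.maximum(w1 @ x, 0.0)

def residual_block(x, w1, w2):
    """out = F(x) + x: the branch output is added to the identity shortcut."""
    return residual_branch(x, w1, w2) + x

rng = np.random.default_rng(0)
x = rng.standard_normal(8)          # toy "feature map" flattened to 8 values
w1 = rng.standard_normal((16, 8))   # expand to 16 hidden units
w2 = np.zeros((8, 16))              # last layer initialised to zero

out = residual_block(x, w1, w2)
print(np.allclose(out, x))          # True: with F(x) = 0 the block is an identity mapping
```

This is why a residual block can always fall back to "do nothing": the identity is the default, and the branch only learns a correction on top of it.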

Here the output of RES_block_first is a residual: the network approaches out_feature_map by adding up the residuals produced by successive residual blocks. Each residual block uses a skip connection (shortcut). My intuitive interpretation is an analogy with gradient boosting on trees: just as each tree in a boosted ensemble is fitted to the residual errors left by the previous trees, each residual block produces a residual that corrects its input. Shortcuts also mitigate the vanishing-gradient problem: during backpropagation the gradient flows through the shortcut, bypassing the many convolution layers that could otherwise shrink it.
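The gradient argument can be checked on a toy example. The sketch below assumes scalar "layers" F(x) = w * x with a small weight w = 0.1 (a hypothetical setting chosen for illustration) and estimates d(output)/d(input) with finite differences. In a plain stack the local derivatives multiply to w^L; in a residual stack each block contributes 1 + w, because the shortcut adds a derivative of 1:

```python
def plain_stack(x, weights):
    """Plain network: each toy scalar layer multiplies its input by w."""
    for w in weights:
        x = w * x
    return x

def residual_stack(x, weights):
    """Residual network: each toy block computes x + F(x) with F(x) = w * x."""
    for w in weights:
        x = x + w * x
    return x

def numeric_grad(f, x, eps=1e-6):
    """Central finite-difference estimate of df/dx."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)

weights = [0.1] * 10   # ten layers, each with a small local derivative of 0.1

g_plain = numeric_grad(lambda x: plain_stack(x, weights), 1.0)
g_res = numeric_grad(lambda x: residual_stack(x, weights), 1.0)
print(f"plain:    {g_plain:.3e}")   # about 1e-10 (= 0.1 ** 10)
print(f"residual: {g_res:.3f}")     # about 2.594 (= 1.1 ** 10)
```

Real networks are non-linear and normalised, so this only illustrates the mechanism: the shortcut keeps an identity term in every factor of the chain rule.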

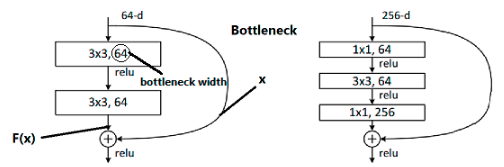

Residual block or bottleneck

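Figure A shows the main building block of this network. It comes in two variants: the basic block (two 3x3 convolutions), used in the smaller ResNet-18/34, and the bottleneck block (1x1, 3x3 and 1x1 convolutions), used in the larger ResNet-50/101/152. The shortcut performs an identity mapping, f(x) = x, while the layers of the residual branch are implemented as CONV+BN+ReLU.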
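Why the larger ResNets switch to the bottleneck can be seen from a rough weight count. This sketch counts only convolution weights (no biases, no BN parameters, an assumption for simplicity) and uses the channel widths of the first stage (64 channels for the basic block, 256 with a 64-channel bottleneck for the deeper nets):

```python
def conv_params(k, c_in, c_out):
    """Weights of a k x k convolution without bias (BN follows it in ResNet)."""
    return k * k * c_in * c_out

def basic_block_params(c):
    """Basic block (ResNet-18/34): 3x3 conv c->c, then 3x3 conv c->c."""
    return conv_params(3, c, c) + conv_params(3, c, c)

def bottleneck_params(c_out, c_mid):
    """Bottleneck (ResNet-50/101/152): 1x1 reduce to c_mid, 3x3 at c_mid, 1x1 expand to c_out."""
    return (conv_params(1, c_out, c_mid)
            + conv_params(3, c_mid, c_mid)
            + conv_params(1, c_mid, c_out))

print(basic_block_params(64))       # 73728
print(bottleneck_params(256, 64))   # 69632
print(basic_block_params(256))      # 1179648: two 3x3 convs at full width
```

The bottleneck works on a 256-channel feature map for about the same cost as a basic block on 64 channels, because the expensive 3x3 convolution runs at the reduced width.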

Figure A.

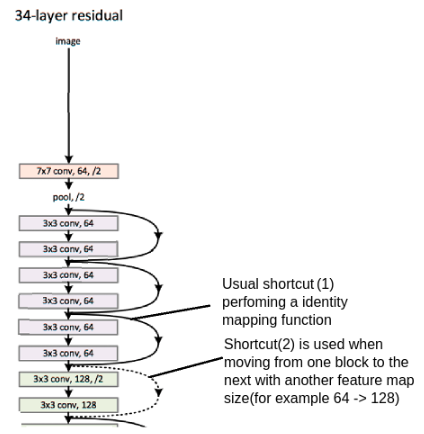

Image B shows the ResNet-34 architecture as an example; blocks with different feature-map sizes are marked with different colors. ResNet uses two types of shortcut:

- Shortcut 1 performs an identity mapping and connects blocks whose feature maps have the same size.

- Shortcut 2 is used when the feature-map size has to change. It can do this in two ways:

  - Non-parametric: subsampling with stride 2 (1x1 pooling with stride 2). This does not change the number of channels, so the missing channels are filled with zero feature maps (the zero-padding option, used for ResNet-18/34);

  - Parametric: a 1x1 convolution with stride 2 (a projection), which increases the number of channels to match the block output. In [1] projection shortcuts performed slightly better than zero padding.
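Both variants of Shortcut 2 can be sketched in NumPy. The example assumes a single feature map in (channels, H, W) layout with no batch dimension, and the transition from 64 channels at 56x56 to 128 channels at 28x28 (as between the first two stages of ResNet-34). The function names are hypothetical, and the BN layer that implementations usually place after the projection is omitted:

```python
import numpy as np

def shortcut_zero_pad(x, c_out):
    """Non-parametric shortcut: subsample with stride 2 (1x1 pooling /2),
    then append zero channels up to c_out."""
    sub = x[:, ::2, ::2]
    pad = np.zeros((c_out - sub.shape[0],) + sub.shape[1:], dtype=x.dtype)
    return np.concatenate([sub, pad], axis=0)

def shortcut_projection(x, w):
    """Parametric shortcut: 1x1 convolution with stride 2.
    A 1x1 conv is a channel-mixing matrix applied at every spatial position."""
    sub = x[:, ::2, ::2]
    return np.einsum("oc,chw->ohw", w, sub)

rng = np.random.default_rng(0)
x = rng.standard_normal((64, 56, 56)).astype(np.float32)   # (channels, H, W)
w = rng.standard_normal((128, 64)).astype(np.float32)      # 1x1 conv weights, 64 -> 128

a = shortcut_zero_pad(x, 128)
b = shortcut_projection(x, w)
print(a.shape, b.shape)   # (128, 28, 28) (128, 28, 28)
```

Either output now matches the shape of the residual branch, so the two can be added.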

Figure B.

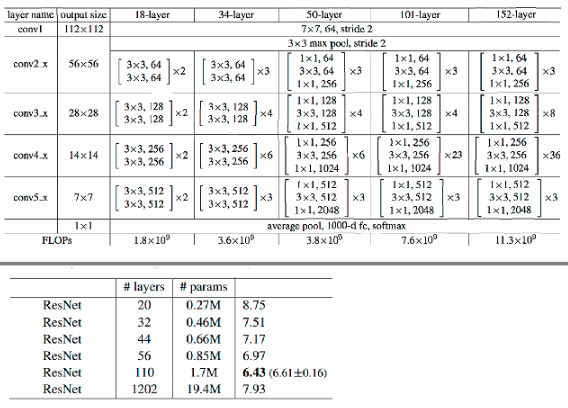

ResNet architecture

Figure B (top) shows the ResNet architectures. Some convolution layers use ‘same’ padding (the HxW of the output equals the HxW of the input), and the feature-map sizes are computed for a 224x224 input image. The bottom table shows the number of parameters for architectures with different numbers of layers.
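The feature-map sizes and layer counts in the table can be reproduced with a little arithmetic. This sketch assumes the standard stem (7x7/2 convolution with padding 3, then 3x3/2 max pooling with padding 1), models the downsampling at the start of each later stage as a 3x3/2 convolution with padding 1, and uses the stage names conv1, conv2_x ... conv5_x and the blocks-per-stage lists from the original paper. Depth counts the convolutions on the main path plus conv1 and the final fully connected layer; projection shortcuts are not counted:

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

# Spatial sizes for a 224x224 input.
size = conv_out(224, kernel=7, stride=2, padding=3)    # conv1: 7x7/2
sizes = {"conv1": size}
size = conv_out(size, kernel=3, stride=2, padding=1)   # 3x3/2 max pooling
sizes["conv2_x"] = size
# Each later stage starts with a stride-2 convolution; the remaining 3x3 convs
# use 'same' padding and keep HxW unchanged.
for stage in ("conv3_x", "conv4_x", "conv5_x"):
    size = conv_out(size, kernel=3, stride=2, padding=1)
    sizes[stage] = size
print(sizes)  # {'conv1': 112, 'conv2_x': 56, 'conv3_x': 28, 'conv4_x': 14, 'conv5_x': 7}

# Blocks per stage (conv2_x..conv5_x) and conv layers per block.
configs = {
    18: ([2, 2, 2, 2], 2),    # basic block: two 3x3 convs
    34: ([3, 4, 6, 3], 2),
    50: ([3, 4, 6, 3], 3),    # bottleneck: 1x1, 3x3, 1x1
    101: ([3, 4, 23, 3], 3),
    152: ([3, 8, 36, 3], 3),
}
# +2 accounts for conv1 and the final fully connected layer.
depths = {name: sum(blocks) * per_block + 2
          for name, (blocks, per_block) in configs.items()}
print(depths)  # {18: 18, 34: 34, 50: 50, 101: 101, 152: 152}
```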

Figure C.

Links